December 31, 2008

| Inventory of 2008 MR events and books

What follows is a list, as complete as I have been able to make it, of the MR events (meetings, symposia, conferences, courses, etc.) which took place during 2008, together with the number of books published during the year. Also included are partial counts for 2009, based on the data confirmed so far.

| Events | 2008 | Notes | Slated for 2009 |
|---|---|---|---|
| NMR | 64 | Includes all but medical imaging | 15 |
| ESR | 10 | EPR holds steady | 4 |
| MRI | 32 | Some of these meetings are huge | 7 |
| Related | 33 | This category is a bit incomplete | 11 |
| Total | 139 | Up by almost 18%; one every 2.6 days! | 37 |

| Books | 2008 | Comparison with 2007 | Slated for 2009 |
|---|---|---|---|
| NMR | 21 | up from 17 | 7 |
| ESR | 3 | holds steady | 3 |
| MRI | 43 | up from 29 | 13 |
| Total | 67 | Up by almost 40% | 23 |

Note: The book counts are not quite definitive since some books expected to leave the presses at the end of 2008 may be delayed and appear only in 2009.

I thank all those who have let me know about forthcoming events and newly published books. Announcements of events and books on this site are free of any charge and constitute both a promotional vehicle and a service to the MR community.


December 21, 2008


| RMN: Appunti di Lezione

[An Italian-language educational online text on NMR Spectroscopy]

Among my recent editorial activities, I have also decided to publish a booklet written more than 15 years ago by Sandro Gambaro, a long-time friend of mine who did excellent NMR at the Institute of Physical Chemistry of the University of Padova. Sadly, Sandro is no longer with us, but his text still seems to me excellent for giving chemistry students a first, non-superficial grounding in NMR spectroscopy.

The booklet has 110 pages (including a one-page prologue summarizing some of my memories of Sandro) and is a PDF whose pages are mostly scans of the printed original.


December 18, 2008

| Online Repository of Magnetic Resonance Theses

In my attempt to collect links to educational NMR/MRI texts, I became aware of the huge amount of good-quality educational material contained in doctoral Theses (dissertations). Having been written by relative newcomers to MR, the introductory chapters of Theses are often suitable as starters. The inner cores may range from decent to brilliant, but there is always something interesting to learn, and some Theses qualify as good-quality books. Finally, the reference lists are often well researched, complete with titles, and exhaustive, which makes them very valuable. For these reasons, I have started collecting links to MR Theses available on the Internet. They constitute three distinct Sections of the new web page entitled Magnetic Resonance Online Texts, dedicated respectively to NMR/ESR, MRI, and MR-related areas.

I invite Authors of Theses which are already available on the Net to let me know the links, so I can insert them. In addition, if you would like to make your Thesis public but do not know where or how, let me know and I will be glad to host it for free on this site (the same applies to books, lecture notes and courses). If you are willing to pay $5 for an optional registration, I can also assign a DOI number to your document (a DOI, apart from establishing a clear copyright base, makes citing a document much simpler and more professional).

I am aware that the initiative might raise some copyright questions. There is a universal consensus that the primary copyright to a Thesis rests forever with its Author. Beyond that, Institutions adopt all kinds of attitudes: some apply an additional copyright of their own, while others either leave the question open or explicitly renounce any rights of their own. My policy is to simply link to anything that is already in the public domain (assuming that the linked-to material is legal), and respect the Author's will for any material yet to be published. In the latter case, it is up to the Author to check with his/her Institution whether there might be any objection to making a Thesis public.


December 6, 2008

| A Basic Guide to NMR by Jim Shoolery, 3rd Edition

The booklet A Basic Guide to NMR by Jim Shoolery (87 pages) was an important entrance gate to NMR spectroscopy for many chemists starting their careers in the 1970s. Originally published by Varian in 1972 and again in 1978, it was used by the Company as an educational handout. It covered the basics of organic-chemistry NMR spectroscopy as well as many "tricks and tips" on how to operate CW NMR spectrometers. The book, though widely quoted in the NMR literature, has become practically unavailable, which is a pity because it has both historic value and, despite all those years, many educational merits.

For this reason, I have now published the 3rd edition of the book in Stan's Library and made it freely available on the Net in scanned PDF format. Legally, this has been made possible through the courtesy of Varian Inc. and a hearty endorsement by the Author, as testified by this excerpt from his wife Judith's e-mail of November 17:

Dear Stan, I enjoyed hearing from you again ...

Jim is very glad that you want to reprint his early application report "A Basic Guide to NMR" from 1972. You have his permission, and he gives it with pleasure. He would enjoy having a note when you have finished posting it. We have no way of including his signature, but please be assured that he is aware of your request and heartily endorses it.

With warm good wishes, Jim and Judy Shoolery

I have taken the liberty of writing a brief Preface to the new Edition. It clarifies the present-day merits and limits of the Opus and summarizes (no doubt clumsily) the historic role of its Author.

I have also assigned it a DOI: 10.3247/SL2Nmr08.012.


November 15, 2008

More on FFT/DFT: DFT Math, a free online book by J. O. Smith III

| FFT and the Cold War

Reading one of the free online books made available by MSRI (Mathematical Sciences Research Institute), I came across a historical pearl which merits our attention, since it concerns the famous Fast Fourier Transform (FFT) algorithm of Cooley and Tukey [An algorithm for the machine calculation of complex Fourier series, Math. Comp. 19, 297-301, 1965], without which modern magnetic resonance of almost any kind could hardly exist. It links the birth of the algorithm directly to the Cold War nuclear-weapons treaties!

The following excerpt is part of an essay on The Cooley-Tukey FFT and Group Theory written by David K. Maslen and Daniel N. Rockmore. It appears on pages 284-285 of Volume 46 of the MSRI series, which is dedicated to Modern Signal Processing (edited by D. N. Rockmore and D. M. Healy, Jr.).

... The story of Cooley and Tukey's collaboration is an interesting one. Tukey arrived at the basic reduction while in a meeting of President Kennedy's Science Advisory Committee where among the topics of discussions were techniques for off-shore detection of nuclear tests in the Soviet Union. Ratification of a proposed United States/Soviet Union nuclear test ban depended upon the development of a method for detecting the tests without actually visiting the Soviet nuclear facilities. One idea required the analysis of seismological time series obtained from off-shore seismometers, the length and number of which would require fast algorithms for computing the DFT. Other possible applications to national security included the long-range acoustic detection of nuclear submarines.

R.Garwin of IBM was another of the participants at this meeting and when Tukey showed him this idea Garwin immediately saw a wide range of potential applicability and quickly set to getting this algorithm implemented. Garwin was directed to Cooley, and, needing to hide the national security issues, told Cooley that he wanted the code for another problem of interest: the determination of the periodicities of the spin orientations in a 3-D crystal of He3. Cooley had other projects going on, and only after quite a lot of prodding did he sit down to program the "Cooley-Tukey" FFT. In short order, Cooley and Tukey prepared their paper, which, for a mathematics/computer science paper, was published almost instantaneously - in six months!. This publication, Garwin's fervent proselytizing, as well as the new flood of data available from recently developed fast analog-to-digital converters, did much to help call attention to the existence of this apparently new fast and useful algorithm. ...

For me, these sentences were an eye-opener, since in 1963 I had my own little nerve-racking brush with the Soviet-bloc nuclear establishment and, a few years later, I started using the FFT on an almost daily basis. Until today, however, I had never suspected that there might be any connection between the two! Funny how small this world really is, and how true it is that wars, deplorable as they are, sharpen the acumen of those involved.

The acumen of true giants, however, does not need any sharpening. You might have noticed that the above excerpt calls the FFT an "apparently new" algorithm. This is because (as the Authors point out on page 283) the great Johann Carl Friedrich Gauss already had all its ingredients in place and was even putting it to practical use in manual calculations around 1805, some 160 years before it was rediscovered!

An afterthought: before somebody raises the question, let it be clear that the FFT (Fast Fourier Transform) is simply a fast algorithm for computing the DFT (Discrete Fourier Transform), so the two yield the same result (up to round-off), while the FT (Fourier Transform) is more general. The FT can be either discrete (digital) or continuous (analytic), but only the discrete version can be handled by computers.
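The equivalence of DFT and FFT is easy to check numerically. The following minimal Python sketch compares a direct O(N²) evaluation of the DFT with a textbook recursive radix-2 Cooley-Tukey FFT on a synthetic decaying signal (a stand-in for an FID; the function names `dft` and `fft` are illustrative, not a library API). The two results agree to round-off level, while the FFT needs only O(N log N) operations.

```python
import cmath

def dft(x):
    """Direct O(N^2) evaluation of X[k] = sum_n x[n] * exp(-2*pi*i*k*n/N)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    N = len(x)
    if N == 1:
        return list(x)
    if N % 2:
        raise ValueError("length must be a power of two")
    even = fft(x[0::2])  # DFT of the even-indexed samples
    odd = fft(x[1::2])   # DFT of the odd-indexed samples
    out = [0j] * N
    for k in range(N // 2):
        t = cmath.exp(-2j * cmath.pi * k / N) * odd[k]  # twiddle factor times odd part
        out[k] = even[k] + t
        out[k + N // 2] = even[k] - t
    return out

# Synthetic "FID": a decaying complex exponential, 64 points (illustrative only)
fid = [cmath.exp((2j * cmath.pi * 0.125 - 0.05) * n) for n in range(64)]
slow, fast = dft(fid), fft(fid)
print(max(abs(a - b) for a, b in zip(slow, fast)))  # expected at round-off level
```

The recursive form is chosen for readability; production code would use an optimized library routine instead.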


November 8, 2008

[Figure: schematic illustrations of a doublet at various S/N, simulated by Mnova: S/N = 1:1 (useless!), S/N = 10:1 (sufficient), S/N = 100:1 (too good!)]

| A simple economy model for MR services

Prompted by a friend's query, I was thinking about an objective way to estimate the optimal amount of precious spectrometer/scanner time to dedicate to a noisy sample or to a medical image. Clearly, there are some key features (spectral lines, anatomical details, T1 fitting error, ...) in the product (f-domain spectrum, image, t-domain experiment) which need to be brought out. If the weakest of these features has an insufficient initial S/N ratio ηi, accumulation is called for. The accumulation is done in multiples of some basic data unit. The meaning of the latter term depends upon the experiment to be performed: it might be a single scan, a complete phase cycle, the minimum number of scans needed to sample a 2D k-space array, the set of IR scans describing a desired τ-dependence, etc. Now the question is: knowing ηi, plus some economic parameters, what is the optimal number n of data units one should collect?

It is evident that there is indeed an optimal value of n. When n is too small (such as n=1) we have data with insufficient quality, and those are as good to us as having no data at all (zero benefit). On the other hand, once the S/N of the weakest key feature has reached something like 10, it is unlikely that further accumulation might unravel more info than what is already available. To continue would be a waste of time and resources!

In order to model the situation, I first propose a benefit curve B(η) as a function of the S/N ratio η. Clearly, below some minimum S/N level ηm (such as 1), the data are unusable, so that B(η) should be zero. Above this threshold B(η) should increase sharply until η reaches whatever is considered a sufficient S/N level ηs (such as 10), beyond which it should level off at some benefit value "b" associated with a successful top-quality measurement. I believe that any smooth B(η) curve satisfying these criteria will do, in the sense that its exact shape is of little importance. A suitable functional form of B(η) might be, for example, this one:

$$B(\eta) = b\,\max\left[0,\; 1 - e^{-2(\eta-\eta_m)/\eta_s}\right]$$
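For readers who want to experiment with the proposed shape, here is a minimal Python sketch of B(η) exactly as written above. The defaults ηm = 1 and ηs = 10 are the example values suggested in the text; the function name `benefit` is an illustrative choice.

```python
import math

def benefit(eta, b, eta_m=1.0, eta_s=10.0):
    """B(eta) = b * max[0, 1 - exp(-2 (eta - eta_m) / eta_s)]."""
    return b * max(0.0, 1.0 - math.exp(-2.0 * (eta - eta_m) / eta_s))

# Benefit as a fraction of b for a few S/N values
for eta in (0.5, 1.0, 3.0, 10.0, 30.0):
    print(f"eta = {eta:5.1f}  B/b = {benefit(eta, b=1.0):.3f}")
```

With these defaults B is exactly zero up to η = ηm, reaches roughly 83% of b at η = ηs, and is within a fraction of a percent of b at η = 3ηs, which is the levelling-off behaviour the model asks for.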

When we acquire n data units, the initial S/N ratio ηi gets boosted by √n, so that the final post-accumulation benefit can be expressed in terms of data units as

$$B'(n) = B(\eta_i\sqrt{n})$$

It is easier to evaluate the cost C(n) associated with the acquisition of n data units. There is a basic cost "p" associated with sample/patient preparation and with equipment setup, and an average running cost "c" associated with a single data unit (typically, "c" is the product of the time needed to acquire one data unit and the running cost of the installation per unit time). Hence C(n) = c·n + p.

Having expressed both the benefit and the cost in the same economic units, we can define the post-accumulation benefit/cost ratio R(n):

$$R(n) = \frac{B'(n)}{C(n)} = \frac{B(\eta_i\sqrt{n})}{C(n)}$$

The benefit/cost ratio R(n) stays zero until n exceeds (ηm/ηi)². It then grows towards a maximum at n slightly beyond (ηs/ηi)² and decreases afterwards. One can use numerical methods to find the value N of n which maximizes R(n), and then calculate the expected total cost C(N) and the corresponding optimal benefit/cost ratio R(N) in order to find out whether it makes sense to start the measurement at all. Should R(N) be smaller than unity, the assay of the sample could never be economically viable.
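The numerical search mentioned above can be as simple as a brute-force scan over n. The sketch below assumes the B(η) shape proposed earlier and C(n) = c·n + p; the function names and the input values (a single-unit S/N of 0.5 plus the cost figures of the NMR-lab example that follows) are hypothetical choices for illustration.

```python
import math

def benefit(eta, b, eta_m, eta_s):
    """B(eta) = b * max[0, 1 - exp(-2 (eta - eta_m) / eta_s)]."""
    return b * max(0.0, 1.0 - math.exp(-2.0 * (eta - eta_m) / eta_s))

def ratio(n, eta_i, b, c, p, eta_m=1.0, eta_s=10.0):
    """R(n) = B(eta_i * sqrt(n)) / C(n), with C(n) = c*n + p."""
    return benefit(eta_i * math.sqrt(n), b, eta_m, eta_s) / (c * n + p)

def best_n(eta_i, b, c, p, eta_m=1.0, eta_s=10.0, n_max=20_000):
    """Brute-force search over n = 1..n_max for the n that maximizes R(n)."""
    return max(range(1, n_max + 1),
               key=lambda n: ratio(n, eta_i, b, c, p, eta_m, eta_s))

# Hypothetical inputs, for illustration only
eta_i, b, c, p = 0.5, 100.0, 0.168, 10.0
N = best_n(eta_i, b, c, p)
print("numeric N:", N, " C(N):", round(c * N + p, 2),
      " R(N):", round(ratio(N, eta_i, b, c, p), 3))
print("rule-of-thumb N:", (10.0 / eta_i) ** 2)
```

How close the numeric optimum lands to (ηs/ηi)² depends on the assumed B(η) shape and on the ratio p/c. With these inputs, where the setup cost p is small compared with c·(ηs/ηi)², the search settles well below the rule-of-thumb value of 400, so it may be worth running it rather than relying on the approximation alone.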

A rule-of-thumb analysis of these premises gives the approximate equations

$$N \approx \left(\frac{\eta_s}{\eta_i}\right)^2, \qquad R(N) \approx \frac{b}{c\,N + p},$$

showing that the accounting depends entirely upon the ratio ηs/ηi and upon the three economic parameters b, c, p. Of these, the most difficult to assess is the maximum benefit "b", which is well defined in purely commercial environments but becomes rather subjective in academia ("my experiment will pave the way to a Nobel prize") or in clinical settings ("this assay is crucial for the patient's survival"). Nevertheless, there almost always exists a broader frame which makes it possible to assign a reasonable objective benefit value to an average MR assay. Notice also that the optimal number N of data units depends, approximately, only on ηs/ηi and not on the three economic parameters. Consequently, it can be estimated before pondering the costs and benefits.

Example: A self-financing NMR service lab charges $100 for a simple proton or carbon spectrum. When running the experiment, they routinely acquire a multiple of four scans (dictated by a phase cycle), and the basic data unit of 4 scans takes 0.8 minutes of acquisition time. They accept only sealed samples but nevertheless estimate the combined costs of sample handling (registering, labelling, ...) and of the instrument setup at $10. The estimated running costs of the spectrometer (including investment depreciation) are $300 a day ($0.21 a minute). What is the minimum single-scan S/N ratio needed to turn a profit?

Solution:

We have b = 100, c = 0.8 × 0.21 = 0.168, p = 10, and we assume that ηs = 10 and that the spectrometer is operated 24 hours a day. To break even, the cost C(N) must be approximately equal to b. Hence N must be smaller than about (b - p)/c, which evaluates to 536 units of 4 scans (a total of 2144 scans). It follows that ηi must be at least 10/√536, which gives 0.43 for the minimum S/N ratio in a 4-scan unit, corresponding to an S/N of 0.215 in a single scan (S/N grows as the square root of the number of scans, so a 4-scan unit has twice the single-scan S/N).
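The break-even arithmetic of the example can be reproduced in a few lines. This minimal Python sketch uses only the numbers quoted above; it sets C(N) = b and applies the √n scaling of S/N, both as in the text.

```python
import math

b, p = 100.0, 10.0      # fee per spectrum and setup cost ($)
c = 0.8 * 0.21          # $ per 4-scan data unit (0.8 min at $0.21/min)
eta_s = 10.0            # "sufficient" S/N of the weakest feature

n_max = (b - p) / c                  # largest N with c*N + p <= b (break-even)
eta_unit = eta_s / math.sqrt(n_max)  # minimum S/N of one 4-scan unit
eta_scan = eta_unit / math.sqrt(4)   # S/N scales with sqrt(number of scans)
print(round(n_max), round(eta_unit, 2), round(eta_scan, 3))
```

Carried through without intermediate rounding, the single-scan value comes out as about 0.216, which matches the 0.215 above to within the rounding of the 4-scan figure to 0.43.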

In the particular case I have encountered, the lab manager used the known spectrometer sensitivity to actually convert these results into minimum molarity requirements (separately for 1H and 13C) and multiplied the result by a "safety" factor of 2 to allow for spectral peak splittings and also to make sure that the lab actually does turn some profit.


October 25, 2008

| Nuovi testi didattici di Spettroscopia NMR

[New Italian-language educational online texts on NMR Spectroscopy]

Stefano Chimichi is a Professor at the "Ugo Schiff" Department of Organic Chemistry of the University of Florence, but many of us know him above all as a passionate devotee of NMR Spectroscopy, a member of GIDRM (Gruppo Italiano di Risonanza Magnetica, the Italian Magnetic Resonance Group) and the tireless organizer of the NMR School held every year in Turin under the aegis of GIDRM.

Stefano has now written up two of his Courses in PDF format and decided to make them freely available on his website. They are two substantial documents devoted to the parameters of high-resolution NMR spectra. The first (entitled Il chemical shift, "The chemical shift") has 96 pages, while the other (entitled L'accoppiamento indiretto spin-spin, "Indirect spin-spin coupling") contains as many as 127. Both texts are richly illustrated and written so as to be easy to read and understand, reflecting the Author's deep teaching experience.

Thank you, Stefano!


October 15, 2008


| What spin does a nucleus take

Bijit Bhowmik is asking:

Sir, I am student of chemistry. While reading NMR I am facing a trouble. Can you please tell me why some of the nuclei take I = 3/2 or 5/2 while some others take 1/2?

Regards, Bijit.

Dear Bijit, yours is a very good question indeed.

In principle, a nuclide and all its [ground-state] properties are fully defined by its atomic number Z (number of protons) and its mass number A (total number of nucleons). In other words, if we take two nuclides for which those two numbers coincide, there is no known experiment which might permit us to tell one from the other. However, this knowledge does not help us much to actually compute the nuclide's properties such as spin, magnetic dipole moment and electric quadrupole moment. Nuclear physics theories are not yet up to the task, so these properties are presently accessible only experimentally (except in a few cases where decent approximate solutions exist).

Nevertheless, there are some fundamental quantum constraints and some empirical rules on what spin value a nuclide may "take", as you say.

A very fundamental quantum constraint says that the value of the spin I, which defines the nuclide's intrinsic and immutable angular momentum hI/2π (h being the Planck constant), must be an integer multiple of 1/2. So nuclides (or any physical objects, for that matter) can have only spin I = 0, 1/2, 1, 3/2, 2, ... The actual spin of any given nuclide depends on how its constituent nucleons (protons and neutrons) are coupled with each other and on the orbital momenta they possess in the nuclide's ground state.

Among the stable and long-lived (SLL) nuclides we find most of the allowed spin values between 0 and 14/2 (= 7). If we denote by N the number of neutrons in a nuclide (N = A - Z) and classify the nuclides according to whether Z and N are both even (class EE), both odd (class OO), or one even and one odd (the mixed classes EO+OE), we find that:

- Most SLL nuclides (155 of them) belong to the EE class and have spin 0. Consequently, they also have zero angular momentum and zero magnetic dipole and electric quadrupole moments. They are invisible to NMR.

- Only 8 SLL nuclides belong to the class OO, and they have exclusively integer spins:
  2H (I=1), 6Li (I=1), 14N (I=1), 10B (I=3), 40K (I=4), 138La (I=5), 50V (I=6), 176Lu (I=7).
  These include the three nuclides with the highest spin values. Notice that there are no SLL nuclides with spin 2.

- 111 SLL nuclides are divided more or less equally between the two mixed classes. Their spin values are all half-integer, distributed from 1/2 to 9/2 as follows:

| Spin | Number of SLL nuclides | Note |
|---|---|---|
| 1/2 | xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx 31 | Quadrupole moment = 0 |
| 3/2 | xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx 32 | |
| 5/2 | xxxxxxxxxxxxxxxxxxxxx 21 | |
| 7/2 | xxxxxxxxxxxxxxxxxxx 19 | |
| 9/2 | xxxxxxxx 8 | |
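The three parity rules above can be checked mechanically. The minimal Python sketch below classifies a handful of familiar NMR nuclides by the parity of Z and N = A - Z and compares their ground-state spins with what the rules predict. The small spin table is typed in by hand for illustration (standard tabulated spins); it is not the full SLL list behind the counts above, and the helper names are hypothetical.

```python
from fractions import Fraction as F

# (symbol, Z, A, ground-state spin I) for a few familiar NMR nuclides
NUCLIDES = [
    ("1H", 1, 1, F(1, 2)), ("2H", 1, 2, F(1)), ("10B", 5, 10, F(3)),
    ("12C", 6, 12, F(0)), ("13C", 6, 13, F(1, 2)), ("14N", 7, 14, F(1)),
    ("15N", 7, 15, F(1, 2)), ("16O", 8, 16, F(0)), ("17O", 8, 17, F(5, 2)),
    ("19F", 9, 19, F(1, 2)), ("23Na", 11, 23, F(3, 2)), ("27Al", 13, 27, F(5, 2)),
    ("31P", 15, 31, F(1, 2)), ("51V", 23, 51, F(7, 2)), ("93Nb", 41, 93, F(9, 2)),
]

def parity_class(Z, A):
    """Return 'EE', 'OO' or 'EO/OE' from the parities of Z and N = A - Z."""
    N = A - Z
    if Z % 2 == 0 and N % 2 == 0:
        return "EE"
    if Z % 2 == 1 and N % 2 == 1:
        return "OO"
    return "EO/OE"

def spin_kind(I):
    """Classify a spin value as zero, (nonzero) integer or half-integer."""
    if I == 0:
        return "zero"
    return "integer" if I.denominator == 1 else "half-integer"

# Empirical SLL rules: EE -> spin 0, OO -> integer spin, mixed -> half-integer
EXPECTED = {"EE": "zero", "OO": "integer", "EO/OE": "half-integer"}

for sym, Z, A, I in NUCLIDES:
    cls = parity_class(Z, A)
    ok = spin_kind(I) == EXPECTED[cls]
    print(f"{sym:>5}  Z={Z:<3} N={A - Z:<3} {cls:<6} I={I}  {'ok' if ok else 'EXCEPTION'}")
```

Note that the rules only predict the kind of spin (zero, integer, half-integer); as explained below, the actual value still has to come from experiment.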

These empirical rules represent important hints for theoretical nuclear physicists, such as the existence, inside a nucleus, of a strong association of identical nucleons into pairs with opposite, mutually cancelling spins. Unfortunately, the hints are not enough to develop a theory which would permit a sufficiently reliable a priori calculation of a nuclide's spin from the two numbers [Z,A]. Much less are we able to compute precisely the real-valued properties of nuclides like magnetic and quadrupole moments which, by the way, are also subject to a number of empirical rules.

Addendum by Stan, Oct. 17, 2008:

Definition of long-lived nuclides: those that were born before the Earth took shape and which still exist in measurable quantities in the Earth's crust.

Spin-2 nuclides do exist, but none of them is SLL. One example is 204Tl, which is unstable, with a half-life of about 3.78 years.

It is also interesting to note that there are only three naturally occurring elements which have no SLL isotope with a non-zero spin and which therefore can never become objects of NMR studies. These are Ar (argon), Ce (cerium) and Th (thorium).

In addition, the radioactive elements Rn (radon) and Ra (radium), which are not long-lived but are present in Nature in traces as by-products of the decays of radioactive SLL nuclides, have each just one isotope whose spin is 0. They are therefore also totally inaccessible to NMR, regardless of what might be the available quantities.


September 30, 2008

| Chemical shifts of naked and dressed protons

I have received the following query:

I am a physicist in Japan, working with solid NMR on quantum magnets.

Let me ask a question about proton moment. Shielded proton moment and gyromagnetic ratio are given in CODATA. These values are measured for water. Is there detailed data of chemical shift of water in the same condition?

Thank you in advance. Tetsuo Ohama, Chiba University, Japan

Dear Tetsuo, you catch me a bit unprepared, but I will try to do my best. Yes, CODATA sets the proton magnetic shielding correction at 25.694 ppm with an uncertainty of 0.014 ppm. This is the relative amount of magnetic-field shielding σ between a "naked" proton and one in bulk water under standard conditions (25 °C, 100 kPa, triple-distilled and degassed sample with natural isotopic composition and pH of 7.0). There is no reason to doubt this value, since it is quite easy to measure (possibly easier than preparing the sample). Moreover, it was experimentally estimated (27.0 ppm) and theoretically computed using ab initio calculations (17.7 ppm) as early as 1957 (McGarvey, J. Chem. Phys. 27, p. 68), even though today, knowing what a perverse compound water is [look here], these early results smack of the proverbial beginner's luck.

The first problem with water is that, due to its mobile (exchangeable) hydrogens and its labile but complex local stereochemistry, its chemical shift is very dependent on its physical state. Chemists normally set the chemical shift δ of bulk water under standard conditions at 4.795 ppm, with a reproducibility uncertainty of about 0.005 ppm. This value is referenced, as usual, to tetramethylsilane (TMS). However, it is sufficient to consult any of the numerous tables of solvent chemical shifts [1.xls, 2, 3, ...] to notice that, for example, the chemical shifts of traces of water present in other solvents can range from 0.4 (in benzene) to 4.9 (in pyridine). Physicists should never have used such an ill-defined compound as their shielding standard (TMS would have been perfect).

The second problem with water is that while the CODATA guys measure bulk H2O, chemists use deuterated solvents and thus measure only the residual protons in almost pure D2O. Under these conditions the dominant isotopic species is HDO which is shifted about 0.035 ppm upfield from H2O due to the isotopic chemical shift effect. There is a general rule which says that when one substitutes a nuclide in a chemical group with a heavier isotope then all other nuclides in the group become a bit more shielded (this has to do with an overall reduction in vibrational amplitudes). Consequently, the chemical shift of protons in standard bulk water should be 4.795 + 0.035 = 4.830 ppm, give or take 0.02 ppm. Of course, heavy water and normal water do not even have the same bulk properties (such as density and magnetic susceptibility) and this introduces a further uncertainty when trying to deduce the chemical shift of normal water from the data on HDO traces in D2O. I am sure that somebody has measured the chemical shift of normal water under all kinds of conditions but, as I said, I am a bit unprepared to pop out the references. Maybe a reader will illuminate us.

There is a third complication which, however, has nothing to do with water. It concerns the fact that the shielding σ and the chemical shift δ point in opposite directions: the higher the chemical shift δ, the lower the shielding σ, and vice versa (this convention is really just a kind of historic jest due to how the first CW instruments were operated).

All this puts the naked proton at 4.830+25.694 = 30.524 ppm and establishes something akin to an "absolute zero" for chemical shielding.

It is also interesting that protons of the hydrogen molecule have about the same shielding constant as those in bulk water, namely 26.2 ppm, confirmed both experimentally and theoretically back in the fifties (Ramsey, Molecular Beams, p. 235, Oxford University Press, 1956). This means that if you measured traces of hydrogen dissolved in water, the H2 signal should appear very close to the water signal. But then, God only knows what kinds of interactions there are between hydrogen and water, while if you just bubbled hydrogen through the water and measured the gas in the bubbles (with water as reference), you would need to apply a correction for the difference in bulk magnetic susceptibilities. Life is never easy when it comes to parts per million.
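The ppm bookkeeping of the last few paragraphs is simple enough to script. The following Python sketch reproduces it with the values quoted above, using the usual small-shift approximation δ(X) ≈ δ(ref) + σ(ref) - σ(X) (all in ppm), which neglects terms of order σ·δ, here well below 0.001 ppm. It also places the quoted H2 shielding of 26.2 ppm on the same δ scale; that last number is plain arithmetic on the quoted values, not a measurement.

```python
# All values in ppm, as quoted in the discussion above
sigma_water = 25.694    # CODATA shielding correction, bulk H2O
delta_hdo = 4.795       # HDO traces in D2O, referenced to TMS
isotope_shift = 0.035   # H2O assumed this much downfield of HDO
sigma_h2 = 26.2         # shielding of H2 protons (Ramsey, 1956)

delta_water = delta_hdo + isotope_shift
# Small-shift approximation: delta(X) ~ delta(ref) + sigma(ref) - sigma(X)
delta_naked = delta_water + sigma_water            # naked proton has sigma = 0
delta_h2 = delta_water + sigma_water - sigma_h2    # H2 on the same TMS-based scale
print(f"{delta_water:.3f} {delta_naked:.3f} {delta_h2:.2f}")
```

On these numbers the H2 line lands about half a ppm upfield of the water line, which is "very close" on the scale of the full proton window discussed next.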

By the way, one often hears that hydrogen spectra are limited to a window of about 12 ppm. This is more or less true for common organic molecules, but not for organometallic hydrides, where the chemical shifts range from +25 to -60 ppm (see this Chapter in the free online book on organometallics by Rob Toreki). This sets the width of the proton NMR window, from a naked proton to the most dressed-up one, to about 90 ppm.


September 25, 2008

Part of Know Thy Spins!

| Geometry of the AB quadruplet

I have written an article about the geometry of the quadruplet of spectral peaks corresponding to an isotropic, coupled AB spin system composed of spin-1/2 nuclides. To my knowledge, although the spectra of this simplest coupled spin system are ubiquitous and well known, their simple geometric properties have never been pointed out in print. I hope that the article might open up what I would love to call recreational NMR (like the well-established recreational Math).
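For readers who want to play along, here is a minimal Python sketch of the standard textbook expressions for the line positions and relative intensities of an AB quadruplet. It does not reproduce the geometric results of the article itself; it only checks three classic properties: the spacing of each doublet equals J, the geometric mean of the outer and inner separations equals the chemical-shift difference Δν, and the inner/outer intensity ratio equals the outer/inner separation ratio. The function name and the numbers (Δν = 40 Hz, J = 10 Hz) are hypothetical, and J ≥ 0 is assumed.

```python
import math

def ab_quartet(nu_a, nu_b, J):
    """Line positions (Hz, descending) and relative intensities of an AB quartet.
    Textbook result for two coupled spin-1/2 nuclei; assumes J >= 0."""
    center = (nu_a + nu_b) / 2
    D = math.hypot(nu_a - nu_b, J)          # sqrt(dnu^2 + J^2)
    pos = [center + (D + J) / 2, center + (D - J) / 2,
           center - (D - J) / 2, center - (D + J) / 2]
    s = J / D                               # sin(2*theta)
    inten = [1 - s, 1 + s, 1 + s, 1 - s]    # outer, inner, inner, outer
    return pos, inten

pos, inten = ab_quartet(120.0, 80.0, 10.0)  # hypothetical: dnu = 40 Hz, J = 10 Hz
outer, inner = pos[0] - pos[3], pos[1] - pos[2]
print([round(v, 3) for v in pos], [round(v, 3) for v in inten])
print("nu1 - nu2 =", round(pos[0] - pos[1], 6))                      # equals J
print("sqrt(outer * inner) =", round(math.sqrt(outer * inner), 6))   # equals dnu
print("intensity ratio:", round(inten[1] / inten[0], 6), "=", round(outer / inner, 6))
```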

The article also lists a number of properties of the iso(AB) spin system which are apparently trivial but, the way I see it, constitute bare-bones examples of much less trivial features of generic coupled spin systems. The reason for doing so is that I plan to write an online series of articles entitled Know Thy Spins. It will contain mostly educational essays, reference materials and simple software utilities, but occasionally I will also bring up unpublished rules. The article on the geometry of AB quadruplets is an example of what I have in mind, even though it opens the topic in the middle rather than at the beginning. But the Internet is merciful and allows one to add stuff indefinitely at both ends and even in the middle ...

I hope to be able to add material to the series about twice a month (new additions will be announced on this blog).


September 19, 2008


| NMR data archivation and compression

I have read with interest a recent entry on Carlos Cobas' blog entitled SPECTRa, as well as the comment added by Old Swan. It prompted me to start writing a comment of mine, but it became a bit too long and a bit off-the-track, so I have decided to cast it as an article:

The problem of NMR data archivation is an old and complex one. I certainly understand and instinctively approve Carlos' desire to maintain permanently the possibility to return to the original experimental data, as well as Old Swan's decision to avoid introducing any iNMR-specific data format. In my old DOS-based NMR software, which I used for Stelar data-system upgrades of Bruker, Varian and Jeol spectrometers in the late 80's and early 90's, I strongly insisted on the principle that "original data are sacred". But I must confess that I did create our own internal binary standard (possibly because the upgrades included proprietary Stelar acquisition hardware).

At that time I also evaluated the JCAMP format and rejected it because, as formats go, it was one of the worst. It is concerned with storing and compressing masses of data (otherwise, why should it exist at all?), and yet it was born under the amusing a priori requirement that the coded data should be readable by humans, albeit ones well trained in its difference-based coding method. As a result it is rather inefficient in compressing data (especially NMR data, and particularly FIDs) and, of course, nobody in their right mind has ever tried to take advantage of its built-in readability. At the same time, it does not address at all many important archivation-specific issues.
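Why difference coding suits smooth spectra better than FIDs can be illustrated with a toy experiment. The Python sketch below is only the bare differencing idea behind such schemes, not JCAMP-DX's actual character encoding: it stores the first value followed by successive differences and compares the average number of decimal digits per value for a smooth Lorentzian trace and for a noisy, rapidly oscillating FID-like trace. Both signals, and all function names, are synthetic assumptions made for illustration.

```python
import math
import random

def diff_encode(values):
    """First value followed by successive differences (all integers)."""
    return [values[0]] + [b - a for a, b in zip(values, values[1:])]

def diff_decode(codes):
    """Invert diff_encode by running summation."""
    out = [codes[0]]
    for d in codes[1:]:
        out.append(out[-1] + d)
    return out

def mean_digits(ints):
    """Average number of decimal digits per value (sign ignored)."""
    return sum(len(str(abs(v))) for v in ints) / len(ints)

random.seed(0)
n = 1024
# Smooth, spectrum-like trace: one digitized Lorentzian line
smooth = [round(1e6 / (1 + ((i - 512) / 20) ** 2)) for i in range(n)]
# FID-like trace: decaying fast oscillation plus Gaussian noise, digitized
fid = [round(1e6 * math.exp(-i / 300) * math.cos(0.9 * i) + random.gauss(0, 2000))
       for i in range(n)]

for name, data in (("smooth", smooth), ("fid", fid)):
    enc = diff_encode(data)
    assert diff_decode(enc) == data   # the coding is lossless
    print(name, "raw digits:", round(mean_digits(data), 2),
          "diff digits:", round(mean_digits(enc), 2))
```

For the smooth trace the differences need noticeably fewer digits than the raw values; for the oscillating, noisy trace successive samples are barely correlated, so differencing gains little or nothing.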

When Stelar moved into NMR Relaxometry and to Windows-based software, I decided to store all data in ASCII. This implies a data volume expansion rather than compression but, frankly, who cares? Mass storage costs are today a million times smaller than in the 80's, and the devices occupy correspondingly less room. The data protocol is another matter, of course, since even ASCII data necessarily need one (people often forget this). Again, I invented my own, hoping that since the data files were plain text, people could easily inspect them and adapt to their structure whenever they needed to read them with their own or third-party software. Which they mostly did (with exceptions and/or occasional grumbling).

Once I even tried to promote an XML-based archivation standard for NMR variable-field relaxometry, but the feedback on that exhortation/query was exactly ZERO.

So what do I think about NMR data storage and archivation after these experiences? First, that it is indeed a nuisance to have so many manufacturer-dependent native formats. Second, that apparently nobody cares! Neither the manufacturers, nor the NMR end-Users. The only ones who complain are companies producing off-line data-evaluation software (like Carlos' Mestrelab) and, occasionally, an academic guy/gal trying to do some data evaluation of his/her own. Third, while uniformity of formats is a real issue, data compression is almost irrelevant except, maybe, for three- and higher-dimensional data (but even that may change in a few years as we approach the $1 per TeraByte region).

Finally, archivation solutions may very much depend upon the type of the archivation client. Academic institutions may have totally different requirements than, say, pharmaceutical industries. If you archive, for example, 100,000 NMR spectra a year, uniformity of the archive is a must, and the very idea that one of your chemists might ever return to one of the spectra and re-evaluate it from the start is an absurdity. Such a Client wants a catalog of "final" spectra, all evaluated in the same way. A single case evaluated in a different way would constitute a nuisance, even if the second evaluation were much superior! So, as much as I understand the holy status of the original data, I am sure that there are environments where this argument has no weight.

In this context, it is interesting to note what happened in the medical imaging field (including MRI) where, for obvious reasons, archivation is of utmost importance. There the manufacturers joined forces and developed the DICOM standard, which can be used to archive the final images and, optionally, also the original data. In practice, the latter feature is rarely used. In fact, a re-evaluation might imply a doubt about the quality of the first processing (which is a no-no in any clinical environment), and a non-standard, more advanced processing might raise issues which the MRI department is not ready to address. Again, archive uniformity turns out to be more important than a possible loss of potentially recoverable details.

Another archivation problem I see is that in NMR Spectroscopy the structure of the data is presently undergoing a revolution. We are in the middle of an ongoing re-assessment of novel data acquisition schemes, such as sparse sampling, partially random sampling, pseudo-random sampling, multi-rate sampling, phase-multiplexed acquisition (instead of standard phase-cycling), etc. It will probably take a decade before all this is fully explored and sorted out, and it is possible that, in the end, the structure of typical NMR data will be quite different from what we are currently used to. Clearly, any data-standardization proposal made today should take these developments into account.
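To make the point about new acquisition schemes concrete, here is a minimal sketch of one simple flavour of random sparse sampling, assuming exponentially decaying selection weights. The function name and its parameters (n_grid, n_samples, decay, seed) are illustrative only and are not taken from any particular package or published schedule generator. It decides which increments of an indirect dimension actually get acquired.

```python
import math
import random

def exponential_nus_schedule(n_grid, n_samples, decay, seed=0):
    """Choose n_samples of the n_grid indirect-dimension increments.

    Weighted random sampling without replacement, weight(t) = exp(-t / decay),
    so early increments (where the signal is strongest) are favoured.
    Increment 0 is always kept.
    """
    if not 1 <= n_samples <= n_grid:
        raise ValueError("need 1 <= n_samples <= n_grid")
    rng = random.Random(seed)            # seeded, so the schedule is reproducible
    chosen = {0}
    candidates = list(range(1, n_grid))
    weights = [math.exp(-t / decay) for t in candidates]
    while len(chosen) < n_samples:
        idx = rng.choices(range(len(candidates)), weights=weights, k=1)[0]
        chosen.add(candidates.pop(idx))  # remove the pick so it cannot recur
        weights.pop(idx)
    return sorted(chosen)

schedule = exponential_nus_schedule(n_grid=256, n_samples=64, decay=80.0, seed=42)
print(len(schedule), schedule[:10])
```

Note that the schedule (or at least its recipe and seed) becomes part of the data: without it a sparsely sampled FID cannot be interpreted, which is one more item a future archive format would have to carry.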

To wrap it up, I simply do not see enough concern about data standardization and archivation in any NMR area except clinical MRI. Consequently, I do not expect the current messy situation to change any time soon. Even if all NMR software Companies teamed up to do something about it (an unlikely event), they have no influence at all on the NMR manufacturers who will never team up to do anything together unless badly pressed by their end Users. And the end Users are by and large happy enough if their singlets and multiplets and cross peaks turn up where they expect them.

COMMENTS:

19 Sep 2008: Carlos Cobas, (Mestrelab Research)

I have read your last entry with great interest. I agree with most of your comments though I'd like to mention that in my opinion, there are some potentially useful applications of NMR data compression procedures (I mean, real data compression algorithms, not those described in JCAMP format which are basically a joke). Namely:

# Data transmission: there are many situations in which an NMRist wants to send via email a large 2D spectrum to some 'client' for a fast inspection. Sending a 2D spectrum with a size larger than 16 MB is not a good idea (though there exist better ways for sending large files)

# Even though the original FID should be retained, it could be beneficial to compress the final 2D/3D spectrum which is usually much larger (because of zero filling, linear prediction, etc).

# A potential application of the compression of nD spectra could be to speed up some processing operations. For example, indirect covariance NMR involves multiplication of several 2D spectra. Standard multiplication of large 2D matrices could be quite time consuming (e.g. 30-40 s). There exist some advanced methods for matrix multiplication such as multiplication of sparse matrices, etc ... but a possible approach (which I have not tried yet btw) could be the multiplication of spectra in the 'compressed domain'. This should speed up multiplication by several orders of magnitude, I think. I'm sure that there are many processing algorithms which could take advantage of this compressed domain scheme.

# Embedding NMR spectra into other applications (e.g. MS Word, PowerPoint) using e.g. OLE/COM technology which makes it possible to edit the object (spectrum) with the original application (e.g. Mnova).

I know that DICOM allows the compression of images by JPEG & JPEG2000 algorithms, though radiologists have always been very reluctant to accept lossy compression schemes.

Just my 2 cents
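The difference between a general-purpose lossless compressor and the difference-based ASCII encodings of JCAMP can be tried out in a few lines. The sketch below is not any vendor's or JCAMP's actual scheme: it builds a synthetic FID (a made-up decaying cosine with noise), optionally replaces the samples by their first differences, and then applies zlib (Deflate) from the Python standard library. The helper names and signal parameters are hypothetical; the actual compression ratios will depend on the data.

```python
import array
import math
import random
import zlib

def synthetic_fid(n=16384, seed=1):
    """Hypothetical test FID: one decaying cosine plus Gaussian noise, as int32."""
    rng = random.Random(seed)
    return array.array("i", (
        int(1e6 * math.exp(-k / 3000) * math.cos(0.05 * k) + rng.gauss(0, 200))
        for k in range(n)))

def compress(samples, use_delta):
    """Optionally replace samples by first differences, then zlib-compress losslessly."""
    data = array.array("i", samples)           # work on a copy
    if use_delta:
        for k in range(len(data) - 1, 0, -1):  # backwards, so data[k-1] is still original
            data[k] -= data[k - 1]
    return zlib.compress(data.tobytes(), 9)

def decompress(blob, use_delta):
    """Inverse of compress(): inflate, then undo the differencing by a running sum."""
    data = array.array("i")
    data.frombytes(zlib.decompress(blob))
    if use_delta:
        for k in range(1, len(data)):
            data[k] += data[k - 1]
    return data

fid = synthetic_fid()
raw_size = len(fid.tobytes())
for use_delta in (False, True):
    blob = compress(fid, use_delta)
    assert decompress(blob, use_delta) == fid  # lossless round trip
    print(f"delta={use_delta}: {raw_size} -> {len(blob)} bytes")
```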

20 Sep 2008: Stan

Hi Carlos. I did not think about some of your points, particularly the one about data embedding.

Yes, there will always be situations where compression is useful (just like generic file compression). In my original piece, I just wanted to point out that in data archivation, compression is not as important as we often tend to think, while there are a number of other requirements, some of which are User-specific.

For communication, I have mixed feelings. Today, probably the best way to exchange voluminous data is via FTP (one can exchange up to 1 Gbyte in a few minutes). In any case, even the largest NMR spectrum does not beat a 2h video and yet transfer of videos is nowadays quite common. The communication channels themselves transparently compress and decompress the data (whatever they are) using their own techniques, and I wonder whether this is not the best way to handle the communication bandwidth problem.

I find your third point the most interesting. I have so far never seen a numeric algorithm which operates directly on compressed data, but I imagine that it might be possible. In a sense, sparse-matrix algorithms do something of the kind, but what you have in mind is different.
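The sparse-matrix analogy can be illustrated with a toy. This is not a compressed-domain algorithm in Carlos's sense, only a minimal sketch under the assumption that most points of a 2D spectrum are baseline and can be zeroed below a threshold (a lossy step). After that, a matrix product, the core operation of indirect covariance, only has to touch the surviving peaks. The helper names and the dict-of-dicts storage are illustrative choices, not any program's actual implementation.

```python
def to_sparse(dense, threshold):
    """Keep only entries with |value| >= threshold, stored as {row: {col: value}}."""
    sparse = {}
    for i, row in enumerate(dense):
        kept = {j: v for j, v in enumerate(row) if abs(v) >= threshold}
        if kept:
            sparse[i] = kept
    return sparse

def sparse_matmul(a, b):
    """Product of two dict-of-dict sparse matrices; only non-zero terms are visited."""
    result = {}
    for i, a_row in a.items():
        acc = {}
        for k, a_ik in a_row.items():
            for j, b_kj in b.get(k, {}).items():
                acc[j] = acc.get(j, 0.0) + a_ik * b_kj
        if acc:
            result[i] = acc
    return result

def to_dense(sparse, n_rows, n_cols):
    return [[sparse.get(i, {}).get(j, 0.0) for j in range(n_cols)] for i in range(n_rows)]

def dense_matmul(a, b):
    """Reference full product, for comparison."""
    n, m, p = len(a), len(b), len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(m)) for j in range(p)] for i in range(n)]

# Toy "spectra": a few strong peaks on a near-zero baseline
spec_a = [[5.0, 0.001, 0.0], [0.002, 0.0, 2.0], [0.0, 1.5, 0.001]]
spec_b = [[1.0, 0.0, 0.003], [0.0, 4.0, 0.0], [0.001, 0.0, 3.0]]
approx = to_dense(sparse_matmul(to_sparse(spec_a, 0.01), to_sparse(spec_b, 0.01)), 3, 3)
exact = dense_matmul(spec_a, spec_b)
print(approx)
print(exact)
```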

|

September 15, 2008

Renzo Bazzo

Congratulations!

AnnaLaura Segre

In memoriam

| Glimpses of the Italian XXXVIII GIDRM meeting

GIDRM (Gruppo Italiano di Risonanza Magnetica) is an informal Italian NMR discussion group with a very long tradition. Its about 120 members mostly know each other (though it takes time for the older ones like me to remember the many new recruits). It organizes an annual meeting in various places in Italy and an NMR School (always in Torino) which alternates between Basic and Advanced.

Ever since I settled in Italy in 1975, I have loved taking part in the annual meetings. They somehow speak to my heart and, in the long run, they represent an interesting window on Italian Science. For example, consider the fact that at the 1975 meeting I, as a new Bruker representative, offered to sponsor a GIDRM prize for the best paper written by a young GIDRM member who would propose a new pulse sequence (at that time you could count the number of known pulse sequences on the fingers of your hands). To my dismay, the proposal was turned down as an attempt by an International Corporation to take over the people's assembly (consider that Bruker had at that time about 300 employees worldwide, of which just two in Italy - me and a secretary). There was just one man (Giovanni Giacometti) who defended me, but that only further inflamed the political debate. Today, a similar proposal would be gratefully accepted and the Sponsor would be given a high and honorable visibility. To be fair, I must add that all those who attacked my proposal soon became my friends and never took the episode personally. Typical Italians!

The Group went through several crucial moments. To me, the most important ones appear to be the following (not necessarily in chronological order):

(a) The decision to keep the group informal, with very low member quotas, and low registration fees for the meetings.

(b) The decision to hold the meetings in English and to give preference to young people, often at their first public English-language presentation, counterbalancing this by a number of invited talks by international experts.

(c) The internal "civil war" between NMR-ists and EPR-ists in the mid-80s. The latter eventually pulled out and formed a separate group (GIRSE, Gruppo Italiano di Risonanza di Spin Elettronico).

(d) The decision, several years ago, to turn down the offer of AMPERE Groupement to become a member. Unlike most other European NMR Discussion Groups, we have voted in favor of maintaining the informal, low profile, national character.

Worth mentioning is also the fact that at some point in the 80's there was an attempt to expand and cover also MRI, but it never really worked out and lasted only a few years.

The most recent meeting (Sept.10-13, 2008) was held in the Alpine resort town of Bressanone (Brixen in German; the region is bilingual). There were about 85 participants; a bit low, perhaps reflecting the fact that the location is very peripheral and hard to reach for would-be participants from Southern Italy. On the other hand, the University of Padova has a nice facility there, well suited to the purpose and available at nearly no cost (thanks).

The meeting included a MiniSymposium dedicated to the memory of the late Annalaura Segre - a distinguished Italian NMR expert with worldwide standing in NMR of polymers and, more recently, NMR applications in the area of cultural heritage preservation (it).

There were a total of 14 Plenary Lectures (45' each) delivered by F.Dardel (Uni Paris, France), D.Capitani (CNR Montelibretti, Italy) and L.Mannina (Uni Molise, Italy), A.Minoja (Bruker, Italy), B.Blümich (Uni Aachen, Germany), V.Busico and R.Cipullo (Uni Napoli, Italy), A.P.Sobolev (CNR Montelibretti, Italy), B.Brutscher (CNRS Grenoble, France), J.Brus (IMC Prague, Czech Republic), B.Heise (Varian, UK), R.Fattorusso (Uni Napoli, Italy), M.Fasano (Uni Insubria, Italy), R.Simonutti (Uni Milano-Bicocca, Italy), A.Mucci (Uni Modena, Italy) and A.Gräslund (Uni Stockholm, Sweden). These were complemented by shorter presentations (20-30' each) in two parallel sessions, grouped thematically into Biomolecular NMR (6), Methodology (3), NMR of Small Molecules (4), Relaxation/Low Resolution (4) and Materials Science (3).

The three talks I enjoyed most were delivered by Bernhard Brutscher (Tools for fast multidimensional protein NMR spectroscopy), Jiri Brus (Arrangement and dynamics of molecular segments as seen by dipolar solid-state NMR) and Roberto Simonutti (Hyperpolarized Xenon NMR of Building Stones). This is, of course, a strictly personal selection related to my own background - and a painful one, since there were several equally attractive candidates.

The meeting awarded a Gold Medal to the GIDRM member and educator Renzo Bazzo for his distinguished 30-year NMR career (in the UK and Italy) and three prizes for the best posters by young participants (M.Concistrè et al, S.Zanzoni et al, and S.Ellena et al).

The Group's management meeting identified two major problems which need to be faced. The first one is the recent very low participation rate of Italians at international meetings like EUROMAR, ENC, etc. This is undoubtedly due to the current dismal economic situation of Italian Science - participation in meetings with registration fees of the order of 500 euros and/or held in remote places is all but impossible. I can, in fact, confirm that at the two major meetings mentioned above I counted more Czechs than Italians (something I would never have expected to happen even just 10 years ago). Related is the "problem" of the forthcoming 2010 joint EUROMAR/ISMAR meeting in Florence. Considering the high registration fees, the fact that Florence is an expensive town, and the fact that GIDRM has no presence in the meeting's organizing committee, there is a very real risk that this huge Conference held in Italy will be all but deserted by the Italians.

A personal note: I have delivered a talk on behalf of myself and Carlos Cobas entitled Bayesian DOSY: A New Approach to Diffusion Data Processing.

The respective slides are now available for download.

|

September 8, 2008

Intro to DICOM

by Chris Rorden

| How to get started on DICOM

Asaf, a medical physics post-doc and a programmer from India (name withheld) is asking:

Dear Stan,

My boss told me to bone up on DICOM and start using it to code and test some new MRI image processing ideas he was thinking about. Google comes up with literally millions of references to it and I am quite confused about where I should start. Should we invest any money into it in order to save time?

Dear Asaf, you are a lucky guy since DICOM (Digital Imaging and Communications in Medicine) is a well documented public standard for encoding and sharing medical images which is quite easy to learn and supported by mountains of software (viewers, transcoders, libraries for programmers, etc). Moreover, the standard and much of the software are available online for free and some of it is even open-source. There is no need to buy anything!

This may be a bit surprising, since the standard is maintained by NEMA (the Association of Electrical and Medical Imaging Equipment Manufacturers). It regulates both the format of image data files (based on simple n-dimensional data grids with a choice of optional compression schemes) and the communication protocol (essentially TCP/IP) for image servers and the like. The admissible compression schemes, when used at all, include only well-known public standards like JPEG, Run-Length Encoding and Deflate (the Lempel-Ziv-based algorithm also used by zip). The strong points of the standard are:

= It is used uniformly for medical images of all kinds (MRI, CT, PET, ..., you name it).

= Its acceptance by various instrument manufacturers is total (No DICOM, no market!).

= It is free and open-source (including the admissible data compression schemes).

= Descriptive items, instrument settings, and the actual data are all equally important.

= All the image data and its indissoluble attributes reside in the same file.

= The standard supports multiple images (arrayed data sets) in the same file.

= Though designed primarily to encode and archive images, the standard can be used also for raw data sets such as k-space images. Indeed, I think that it should be extended also to other MR techniques (spectroscopy and relaxometry) where standardization of data files is still insufficient.
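To give a programmer a first feel for the "attributes and data in the same file" point, here is a toy sketch of the explicit-VR little-endian data element layout: tag group, tag element, two-letter value representation (VR), length, value, all preceded by the 128-byte preamble and the "DICM" marker. It is deliberately incomplete and the helper names are hypothetical: a conformant Part 10 file also needs the group 0002 file meta information (including the transfer syntax UID), which is omitted here, only a subset of the long-length VRs is listed, and for real work one of the existing DICOM libraries is the sensible choice. The tags used are Modality (0008,0060), Patient's Name (0010,0010), Rows (0028,0010), Columns (0028,0011) and Pixel Data (7FE0,0010); the patient name is made up.

```python
import struct

# Subset of explicit-VR value representations that use 2 reserved bytes + a 4-byte length
LONG_VRS = {"OB", "OW", "OF", "SQ", "UT", "UN"}

def encode_element(group, element, vr, value):
    """Encode one explicit-VR little-endian data element (toy version)."""
    if len(value) % 2:  # DICOM values must have even length
        value += b"\x00" if vr in ("UI", "OB") else b" "
    if vr in LONG_VRS:
        header = struct.pack("<HH2sHI", group, element, vr.encode("ascii"), 0, len(value))
    else:
        header = struct.pack("<HH2sH", group, element, vr.encode("ascii"), len(value))
    return header + value

def iter_elements(buf, offset=0):
    """Yield ((group, element), vr, value) for consecutive explicit-VR elements."""
    while offset < len(buf):
        group, element, raw_vr = struct.unpack_from("<HH2s", buf, offset)
        vr = raw_vr.decode("ascii")
        if vr in LONG_VRS:
            (length,) = struct.unpack_from("<I", buf, offset + 8)
            offset += 12
        else:
            (length,) = struct.unpack_from("<H", buf, offset + 6)
            offset += 8
        yield (group, element), vr, buf[offset:offset + length]
        offset += length

pixels = struct.pack("<4H", 10, 20, 30, 40)  # a 2 x 2, 16-bit toy image
body = b"".join([
    encode_element(0x0008, 0x0060, "CS", b"MR"),
    encode_element(0x0010, 0x0010, "PN", b"Doe^John"),
    encode_element(0x0028, 0x0010, "US", struct.pack("<H", 2)),
    encode_element(0x0028, 0x0011, "US", struct.pack("<H", 2)),
    encode_element(0x7FE0, 0x0010, "OW", pixels),
])
toy_file = b"\x00" * 128 + b"DICM" + body   # preamble + magic + elements
for tag, vr, value in iter_elements(toy_file, offset=132):
    print(f"({tag[0]:04X},{tag[1]:04X}) {vr} {value!r}")
```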

I have put a few links to DICOM in my lists of MRI software (completely free as well as commercial products). You may find them useful but, to keep it short, my answer to your question consists of just two recommendations:

1) Visit the official DICOM homepage and download the free DICOM standards from their anonymously accessible FTP page (available in PDF and DOC formats). You might also like to visit their DICOM Discussion Group. The standards are not particularly good for introductory learning, but they will come in handy later.

2) For an in-depth introduction and a very extensive and practical collection of DICOM resource links, visit the page The DICOM standard of Chris Rorden (University of South Carolina). He has done a much better job on this than I ever could.

As a programmer, you might like the DICOM Cook Book for Implementations and Modalities available from a Philips anonymous ftp (they mark it "for internal use only" but, paradoxically, advertise it on the DICOM site; better grab it soon!). Another book which you can download for free is DICOM Structured Reporting by D.A.Clunie from PixelMed Publishing. Finally, if you can spend some money, consider the new book Digital Imaging and Communications in Medicine (DICOM): A Practical Introduction and Survival Guide by O.S.Pianykh.

|

August 19, 2008


ABQMR's NMR

Right to left:

Andrew McDowell

Eiichi Fukushima

Victor Esch

Dec.1, 2008:

nanoMR web site

is now operative.

| The smallest NMR ever built (?)

In the previous entry I wrote about the smallest ESR instrument ever built. It is therefore only fair that I mention a somewhat similar new NMR venture named NanoMR, based in Albuquerque (New Mexico). It is so new that they still do not have a web site (hence I cannot yet list them in my directory of MR Companies). Even their name is not yet stable - it oscillates between nanoMR, Nano Mr, Nano MR, and NanoMR. They are developing a miniature NMR device which - in combination with a biochemically selective method of marking bacteria with magnetic nano-particles - will make it possible to detect the onset of bacterial sepsis in blood in a few minutes rather than in many hours (sepsis is still a major scare in hospitals and in areas hit by natural disasters).

The new Company started appearing on the Net because of an influx of venture capital, involvement of personalities like Waneta Tuttle (serial entrepreneur) and Victor Esch (NanoMR President and CEO), and the announcement of a number of job openings (including a senior applied NMR scientist and an NMR engineer).

As soon as I noticed that the "NMR brains" behind NanoMR were tagged ABQMR, I knew whom to contact to find out more about their NMR hardware: Eiichi Fukushima, ABQMR President, author of the most printed NMR book, and a photographer hosted on this site! He is in fact happily holding a mini-NMR system in his hand, right in the center of the photo accompanying a New Mexico Business Weekly article about a $5.5M capital injection into NanoMR. I contacted Eiichi and we had this exchange:

Stan: Hi Eiichi, you sure look great in the picture accompanying this story. Is the gadget based on NMR?

Eiichi: Hello Stan,

The device is, indeed, an NMR device -- maybe one of the smallest NMR devices in the world. It has a 1T magnet with a 5mm bore and a microcoil wound on a capillary in the range of ~100-400 micron diameter. The original challenge was to figure out how to tune such a small coil at the relatively low frequency -- of course, high field microcoil work has been going on for some time with people like Tim Peck and Andrew Webb leading the way. This problem was solved by Andrew McDowell a few years ago (JMR 181, 181-190 (2006)).

Now, this low field microcoil NMR is combined with modern immunochemistry, of which I do not know much. These guys have gotten very good at attaching magnetic nanoparticles onto anything they want. These magnetic particles are chosen to have magnetic fields that "reach out" approximately of the order of the capillary's size. Then, when one comes through the coil, the signal of the background fluid is disturbed.

The idea is to have these nanoparticles attach themselves to a desired target, for example, a cancer cell or a bacterium. Then, when the target goes through the coil, it can be counted, one by one. An embellishment is to have many particles attach to the target, not just one. Then you could distinguish the target from the isolated and unattached magnetic particles without having to separate them upstream.

The prototype magnet is about 5cm cubed and weighs less than 800 grams. It is a standard dipole magnet whose parts are machined as accurately as possible. So far, the magnet is being used unshimmed but we are thinking about putting in simple current shims. The magnet has enough iron in it so that it is virtually unaffected by external magnetic fields. We've stuck a NdFeB magnet on the outside of this magnet and the line shifts and broadens a bit but the signal is still looking good.

One of the unshimmed magnets, in combination with a 300 micron diameter sample with a volume of 21 nL, gave a linewidth of 0.24 ppm and a S/N of ~60! Isn't that neat? This isn't very high res NMR but you could think of some useful applications for such a handheld apparatus that costs about the same as an oscilloscope. By the way, this is being accepted for publication in Applied Magn.Resonance and it is also separate from the nanoMR activity.

Stan, I've got to go but I hope this is clear enough for you to get enthusiastic about our low-field and compact (and cheap) NMR development.

Cheers and best wishes, Eiichi.

Well, I admit that I am impressed. Of course, if I get it right, the 5 cm cube contains "only" the magnet and the NMR front-end, while most of the electronics is elsewhere. But still, the sensitivity, and the 0.24 ppm homogeneity! I would have expected >100 ppm !!!
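To put these figures into perspective, a few lines of textbook arithmetic: at 1 T the proton Larmor frequency is about 42.6 MHz, so 0.24 ppm is a linewidth of roughly 10 Hz, while 100 ppm would be over 4 kHz. The last loop illustrates the tuning remark in Eiichi's message using the simple LC resonance condition C = 1/((2πf)²L); the inductance values are made-up examples, not the specifications of the ABQMR microcoil, and the sketch says nothing about how the published tuning solution actually works.

```python
import math

GAMMA_H = 2.6752218744e8  # proton gyromagnetic ratio, rad s^-1 T^-1 (CODATA)

def larmor_hz(b0_tesla):
    """Proton Larmor frequency in Hz at field b0_tesla."""
    return GAMMA_H * b0_tesla / (2 * math.pi)

def ppm_to_hz(width_ppm, b0_tesla):
    """Convert a linewidth in ppm into Hz at the proton frequency."""
    return width_ppm * 1e-6 * larmor_hz(b0_tesla)

def tuning_capacitance(freq_hz, inductance_h):
    """Capacitance resonating with inductance_h at freq_hz: f = 1/(2*pi*sqrt(L*C))."""
    return 1.0 / ((2 * math.pi * freq_hz) ** 2 * inductance_h)

f0 = larmor_hz(1.0)                                  # about 42.58 MHz
print(f"Larmor frequency at 1 T: {f0 / 1e6:.2f} MHz")
print(f"0.24 ppm -> {ppm_to_hz(0.24, 1.0):.1f} Hz")  # the quoted linewidth
print(f"100 ppm  -> {ppm_to_hz(100, 1.0):.0f} Hz")   # the homogeneity one might expect
for coil_l in (10e-9, 50e-9, 200e-9):                # hypothetical microcoil inductances
    c = tuning_capacitance(f0, coil_l)
    print(f"L = {coil_l * 1e9:4.0f} nH needs C = {c * 1e12:6.1f} pF")
```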

For completeness' sake, let me mention that Andrew McDowell, the Vice-President of ABQMR, is a finalist for the APS 2009 Prize for Industrial Applications of Physics.

COMMENTS:

I have received several interesting comments on this entry. It turns out that both NMR miniaturization and the specific application (early detection of bacteria) are hot topics pursued independently in many places.

Chronologically, I first (August 19) received a message from Carlos Cobas with a link to an Analytical Chemistry alert entitled The incredible shrinking NMR. The alert describes a development by the group of Ralph Weissleder at CMIR (Center for Molecular Imaging Research, Harvard) and it sounds so similar to the New Mexico NanoMR initiative that both Carlos and I were somewhat confused by it. Hence the following exchange with Eiichi (August 20-21):

Stan: Dear Eiichi, what can you tell me about this? Are they related to NanoMR and ABQMR?

Eiichi: Hello Stan.

The technology developed by the Weissleder group at Mass General Hospital has the same aim as our technology that nanoMR is developing. However, the NMR principles used in the two schemes are different. Theirs is a bulk method in that they use the change in T2 when the nanoparticles aggregate upon binding to the targets. Ours counts the targets singly by their effect on the background fluid as they come through the small capillary. Their sample and coil are 1-2 orders of magnitude larger than ours. Ours are tailored to be sensitive to the effect of the magnetic particles on the background signal and that means around 50-400 micron diameter, whereas theirs are of the order of a millimeter, I believe. Their technology is being commercialized by T2 Biosystems, another startup company with its NMR leader being Pablo Prado who went there from GE Security which evolved from Quantum Magnetics after it was bought by GE [editor: see this link]. So, we are competitors but, fortunately, with different methodologies.

In fact, T2 Biosystems offer their technology under the trade mark T2Dx instruments. It is evident that the idea of combining miniature NMR systems with magnetic nanoparticles is quite fashionable and promising at this moment.

To continue the story, on August 26 I have received the following e-mail from Bertram Manz from the Arbeitsgruppe Magnetische Resonanz (NMR Group) at IBMT (Fraunhofer-Institut für Biomedizinische Technik in St.Ingbert, Germany):

Hi Stan, I have read your latest blog about the smallest NMR with great interest. It is amazing how similar ideas can arise independently at the same time from different corners of the planet. Earlier this year we published a paper in JMR describing a similar NMR system with comparable size and field strength. However, congratulations to Eiichi and his co-workers for achieving such a high spectral resolution. 0.42 ppm at 1 T is just amazing, since for our system we only got that sort of resolution in the simulations.

Best regards, Bertram

By itself, the idea of miniaturization of NMR is not a new concept, of course. People have been trying it for decades, but with the new permanent magnet materials and the new electronic chips and chip technologies, it is gaining impetus. I have even heard about MR front-ends (complete with miniature inside-out magnets) to be used to explore body cavities, somewhat like colonoscopes, angioscopes, etc. I am sorry for not having links or references - most of these attempts have probably failed so far and did not reach the general public (if you have any links to such mini "well-logging" NMR systems with medical applications, please let me know).

In this general context, I have also found very interesting a mini-MRI system presented at the IEEE ISSCC 2007 Conference by Long-Sheng Fan et al. from the National Tsing Hua University (Taiwan). The title of the presentation says it all: Miniaturization of Magnetic Resonance Microsystem Components for 3D Cell Imaging.

|

August 15, 2008

Mini-ESR

Early video of the BENCH-TOP prototype

| The smallest complete ESR instrument ever built

There is a new ESR Company on the horizon, named Active Spectrum (San Carlos, California)! This by itself might not be so surprising, since over the years we have seen a number of ESR upstarts, most of which, alas, were rather short-lived (during the last three years, for example, a St.Petersburg initiative named SPIn Co. appeared and went extinct). Commercially, sophisticated research-grade ESR has a very limited market (one sells about one ESR instrument per ten NMR spectrometers) and more modest applications, though numerous, are scattered over a broad palette of fields, each of which requires specific knowledge extending far beyond plain spin-system physics.

But something tells me that the Active Spectrum guys have got a number of things right and might actually herald a new applied ESR era.

First, they have developed the world's first miniature ESR spectrometer (see the picture on the left) whose size (2.25 x 1.5 inches) and cylindrical shape place it squarely in the hand-held category. Though they modestly call it an ESR sensor, it is a full-fledged CW-ESR spectrometer, complete with a permanent magnet, field modulation, innovative tunable microwave source and cavity, MW detector, field-frequency lock, analog signal conditioning, etc !!! Regarding the traditional ESR specs, here is an excerpt from a Skype chat I had with James R.White, one of the White brothers (James and Chris) behind the Active Spectrum initiative:

Stan: Tell me a bit about the mini-sensor. Is it really a complete instrument?

James: Yes. It has a micro-controller, memory, power supply, sweep coil, cavity, permanent magnet etc. We set it to sweep the g factor from 1.8 to 2.2 but you can do a shorter interval. The homogeneity of the magnetic field is not quite as high as for the big instruments... it is around 0.5 Gauss now but we are working to get to 0.1 Gauss. The permanent magnetic field is at 1170 Gauss... so the homogeneity is 100 ppm.

S: How do you sweep the g-factor? Have you got a frequency lock incorporated?

J: Yes, we have there a feedback loop that automatically locks the frequency to the cavity resonance. It compensates for drift etc.

S: And you cram it all into a 2.25" x 1.5" cylinder? Is there more hardware on the PC side?

J: No that's it. We have software on the PC which controls all the settings and logs the data.

S: Amazing! What is the sample size and how is the sample loaded?

J: 125 micro-liters (without the tubing). We have peristaltic pumps to load it.

S: I see, but can one also insert a sample in a tube?

J: Not in this design - this design is made for continuous flow. We are working on a new, aqueous flow chamber which will accept also a quartz tube.

S: Ok, so both the mini and the table top are flow-through, right? Does it mean that all tuning is fully automatic (I mean like dip location and conditioning)?

J: Yes. It all happens seamlessly. There is actually no way to intervene.

S: Could be a nice ESR sensor for HPLC.

J: Sure. For PCR tests, for example. I can send you a patent by a chemist called Albert Bobst who has a method of using DNA strands tagged by spin labels. Great way to do genetic testing. It's a nice application you could mention.

S: Yes, I will.
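A quick check of the numbers quoted in the chat: 0.5 G over 1170 G is about 430 ppm, while the 100 ppm figure corresponds to the 0.1 G target (about 85 ppm). The second part of the sketch assumes, purely for illustration, that the microwave frequency is set so that a free-electron-like signal (g = 2.0023) resonates at 1170 G; under that assumption the frequency comes out near 3.3 GHz, and the 1.8 to 2.2 g-factor window translates into a field sweep of roughly 240 G. The actual operating frequency of the device is not stated in the chat.

```python
MU_B_OVER_H = 1.39962449e6   # Bohr magneton / Planck constant, Hz per gauss
G_FREE = 2.0023193           # free-electron g factor

def homogeneity_ppm(spread_gauss, field_gauss):
    """Field spread expressed in parts per million of the main field."""
    return spread_gauss / field_gauss * 1e6

def resonance_field(g, freq_hz):
    """Field in gauss at which h*nu = g*muB*B is satisfied."""
    return freq_hz / (g * MU_B_OVER_H)

for spread in (0.5, 0.1):    # current and target homogeneity quoted in the chat
    print(f"{spread} G at 1170 G -> {homogeneity_ppm(spread, 1170):.0f} ppm")

# Assumption: microwave frequency chosen so that g = G_FREE resonates at 1170 G
freq = G_FREE * MU_B_OVER_H * 1170
print(f"implied frequency: {freq / 1e9:.2f} GHz")
for g in (2.2, 1.8):         # ends of the quoted g-factor sweep
    print(f"g = {g}: {resonance_field(g, freq):.0f} G")
```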

Second, the Mini-ESR functions as a black-box accessory for a PC. To give it a "front panel" and start using it, all you need to do is plug its USB (or other) interface cable into your PC's USB port and run application-specific software. This is exactly what I have in mind when I talk about virtual instrumentation and about the amazing new possibilities opened up by recent advances in electronics. I can easily imagine tens of applications in the most disparate fields (medical, pharmaceutical, food industry and quality control, lubricants, radiation dosimetry, oil prospecting, ..., and plain chemistry labs). While the hardware can be essentially the same in all cases, the respective software packages and User interfaces might be dramatically different - from as simple as a PASSED/FAILED panel (green/red) all the way up to the "classical" look of an ESR spectrometer. Needless to say, the development of each individual application is a non-trivial task and can require a lot of highly qualified brainpower (I hope the Company is aware of this).

Third, it is low-cost! This, together with a cache of viable applications, is the prerequisite for opening markets in which ESR is all but unknown and where traditional instruments would be way too costly. And it opens also the doors to an affordable educational tool for undergraduate and graduate students, and to a routine ESR instrument for every chemical lab, including those in developing Countries. Since the Company has just started, the end-User price of the Mini-ESR is still somewhat fluid, but it is likely to stabilize at an extremely competitive level. Moreover, James says that opening of high-volume applications could bring the price down by a substantial factor ... And the Company offers also a larger, table-top instrument at an attractive price.

Fourth, the Mini-ESR is extremely robust. In their first real-life application (see below) they mount it on a large motor vehicle and drive it around for months. Could you have ever imagined a complete ESR instrument permanently attached to a truck engine? This degree of robustness, involving not just vibrations but also temperature excursions, makes it eminently suitable for all kinds of industrial applications.

Fifth, they have started from an actual application, originally developed to solve a specific problem for the US Army. This is a very sensible business approach and the results, shown below, illustrate best the potential of the Mini-ESR device.

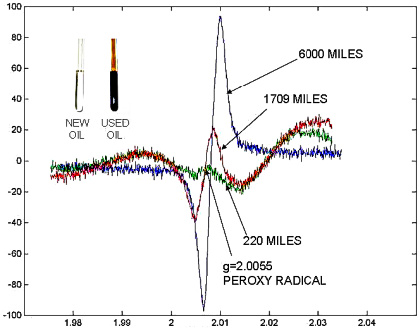

ESR spectra of motor oil at different stages of wear

These spectra were acquired by a Mini-ESR mounted on a large Diesel motor in a flow-through arrangement which permits continuous monitoring of the oil deterioration - an important prerequisite to oil consumption optimization. This particular application is very important both economically and ecologically. Consider just the fact that a large cargo ship consumes well over 1000 liters of motor oil a day!

|

August 8, 2008

MRI:

How come we

love it so much,

trust it so much,

want it so much?

Does seeing mean

so much more

than plain,

simple knowing?

| A crop of new MR books

We seem to be in the middle of a peak of editorial frenzy in NMR, MRI and even ESR. Here is a list of books which left the presses in the last four months or are slated for imminent release within August:

NMR Spectroscopy:

Halfway between NMR and MRI:

MRI - a mere selection, excluding half a dozen purely medical tomes:

- Bagley R., Gavin P., Tucker R.,

Small Animal MRI (Blackwell Publishing).

- Deshmukh A.V.,

Functional Magnetic Resonance Imaging: Novel Transform Methods

(Alpha Science International, Ltd).

This is a very intriguing title!

- Holodny A.I.,

Functional Neuroimaging: A Clinical Approach (Informa Healthcare).

- Huettel S.A., Song A.W., McCarthy G.,

Functional Magnetic Resonance Imaging (Sinauer Associates, 2nd Edition).

So popular that it might be already sold out again !!!

- Lazar N.A.,

The Statistical Analysis of Functional MRI Data (Springer). This book is crammed with heavy math, but it addresses eminently important questions!

- Joyce K.A.,

Magnetic Appeal: MRI and the Myth of Transparency (Cornell University Press).

An interesting reflection about our image-centered diagnostic attitudes.

- As expected, functional MR (fMRI) dominates the new titles.

But have a look here (in the Year 2008 section) for more titles.

Electron spin (ESR/EPR):

BTW, my lists of the NMR, MRI and ESR books ever published have reached the totals of 587, 319, and 134, respectively, not counting the related sections. That brings the grand total to well over 1000, worth throwing a party!

|

July 31, 2008

| Spotting explosives and drugs: a Euromar 2008 update

The many sessions dedicated to spotting explosives and drugs at the last Euromar meeting were actually intended as a separate Workshop with just a loose formal connection to the main meeting. Since I have written about this topic before (see this blog's January entry on Spin Resonance and Warfare), I attended a number of the talks and read most of the relevant posters. Unfortunately, neither of the two pioneers who opened this ex-cold-war area of magnetic resonance applications (A.N.Garroway in the Western Bloc and V.S.Grechishkin in the Eastern Bloc) showed up (Garroway was represented by Joel Miller and Grechishkin defaulted). Wrapped in a few words, my personal impressions are:

(a) While the attention to military and post-military applications like de-mining remains constant, civilian security applications are on the rise. This regards mostly detection of explosives and drugs at mass transit checkpoints (airports, seaports, rail stations, ...).

(b) Years ago there was a widespread hope that reasonably remote detection (a few meters) might be possible for at least some explosives, but today nobody even mentions it.

(c) For near detection (luggage, shoes, ...), there has been a marked increase in sensitivity thanks to new pulse sequences (using multiple refocusing pulses) and to polarization-transfer techniques. This is an ongoing process that might boost sensitivity further by a factor of ~10 or more, especially for explosives with low-frequency NQR transitions. Conceptually, of course, boosting the signal either by generating multiple CPMG-like echoes or by cross-polarization is nothing new in NMR, but in NQR there are some theoretical differences because the observed nuclides have spin > 1/2. As a result we have, for example, pseudo-inversion pulses, and CPMG trains do not behave quite as we are used to in proton NMR. It took a fair amount of theoretical and experimental work to sort these things out.
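A generic back-of-the-envelope illustration of why echo trains help (a sketch only, not the NQR-specific treatment discussed in the talks): if every refocused echo carries independent noise of equal variance, combining N echoes can raise S/N by up to √N, while any decay of the echoes along the train caps that gain. The function name `echo_train_snr_gain` and the single-exponential decay with an effective time constant `t2_eff` are assumptions made purely for illustration.

```python
import math


def echo_train_snr_gain(n_echoes: int, echo_spacing: float, t2_eff: float,
                        matched: bool = True) -> float:
    """S/N of an n-echo train relative to the first echo alone.

    Assumptions (illustrative only): echo k (k = 0..n-1) has amplitude
    exp(-k * echo_spacing / t2_eff) relative to the first echo, and every
    echo carries independent noise of the same variance.
    """
    if n_echoes < 1:
        raise ValueError("need at least one echo")
    amps = [math.exp(-k * echo_spacing / t2_eff) for k in range(n_echoes)]
    if matched:
        # Weight each echo by its expected amplitude (matched filter):
        # signal = sum(a_k^2), noise = sqrt(sum(a_k^2)), so S/N = sqrt(sum(a_k^2)).
        return math.sqrt(sum(a * a for a in amps))
    # Plain co-addition: signal adds as sum(a_k), noise as sqrt(n).
    return sum(amps) / math.sqrt(n_echoes)


# No decay (t2_eff = infinity): both schemes give sqrt(100) = 10.
print(echo_train_snr_gain(100, 1e-3, math.inf))
# With decay, the gain drops well below sqrt(n).
print(echo_train_snr_gain(100, 1e-3, 20e-3))
print(echo_train_snr_gain(100, 1e-3, 20e-3, matched=False))
```

This is purely arithmetic: 100 echoes with no appreciable decay give √100 = 10, and decay within the train lowers the gain. Amplitude-weighted (matched) combination is never worse than plain co-addition, by the Cauchy-Schwarz inequality.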

(d) There is now strong interest in new sensors which, at the low magnetic fields characteristic of these applications, might eventually replace the classical induction coils and boost sensitivity by orders of magnitude. These include SQUIDs (superconducting quantum interference devices), but also sensors based on the relatively new GMR (giant magnetoresistance) effect, and perhaps also those based on electric-field detection.

As one participant put it: although there was no fresh revolutionary breakthrough, judging by what has been achieved in this field over the last ten years, the next decade looks exciting.


July 30, 2008


| Glimpses of EUROMAR 2008

I promised a short report on the 2008 EUROMAR Conference, held July 6-11 in St. Petersburg (Russia). I am sorry for being late, but here it is (better late than never). As always, my perspective is personal and geared towards physical phenomena, so if you work in a different branch, don't criticize me too much.

This annual meeting is the largest and most important one on the European continent, and it is also the major Groupement Ampere venture. It was attended by about 500 participants and held in several splendid ceremonial halls of St. Petersburg State University and of the adjacent Russian Academy of Sciences. The organization was not completely flawless (vendors, in particular, complained about having been relegated to an isolated location) but satisfactory, with lunches served in the nearby Restaurant Academia, one of the best in town. The meeting probably suffered a bit from the fact that a large FEBS & IUBMB Conference with a strong NMR agenda was held in Athens just before it, and the important MRPM meeting in Boston just after it. But that is a broader problem for which nobody has a magic solution.

This year the program (in particular the posters) was to some extent influenced by national scientific themes, which is only natural. In particular, I had the impression that both bio- and nano- applications were somewhat less omnipresent than usual (good for me), while there were two prominent threads: one dedicated to ESR spectroscopy (discovered in 1944 by E.K. Zavoisky in Kazan), and the other to the role of NQR and NMR in spotting explosives, an ever more important former military field with an old tradition at Kaliningrad University under V.S. Grechishkin (I will write more about this in my next entry). Exotic and frontier lines of research of a physical nature, always a staple of Russian scientific culture, were also welcomed and given non-exotic visibility.

The EUROMAR conference was preceded by a two-day summer school on Nuclear Magnetic Resonance in Condensed Matter (NMRCM 2008), which is now an annual St. Petersburg NMR event. To some extent, its program anticipated many of the most salient topics of EUROMAR (other than the ESR and explosives-spotting threads). The programs of both meetings are available at the EUROMAR site.

Despite the slight bias mentioned above, the breadth of topics covered by EUROMAR 2008 was amazing. A dozen plenary lectures were complemented by fourteen dedicated sessions (some with multiple slots, each slot comprising 4 lectures), regrettably but unavoidably running in parallel in three different lecture halls. But let me be a bit more specific and chronological:

The meeting, chaired by Geoffrey Bodenhausen, was opened by Olga Lapina and, after a series of local welcomes, proceeded with the presentation of the Raymond Andrew Prize to Boaz Shapira and the Russell Varian Prize to Alex Pines. Both awardees gave a talk (Spatial Encoding in NMR Spectroscopy and Some Recollections of the Early Days of High-Resolution Solid-State NMR, respectively). Unfortunately, Alex Pines was held up in San Francisco by unexpected health problems, but his interesting presentation was delivered by video.

The various sessions included:

- A Workshop on NMR and NQR in spotting explosives comprising 6 slots.

- Single-slot sessions on ESR Methods and Paramagnetic Systems and ESR Applications.

- A two-slot session on Methods for Biosolids.

- Two more single-slot sessions on solid-state NMR (Spin Dynamics in Solids, and Solid State Physics and Polymers).

- Single-slot sessions on Imaging, Relaxation, Bio-Macromolecules in Liquids, Molecular Dynamics and Other Computational Aspects, Applications in Catalysis, Enhanced Magnetic Resonance, Drug Discovery, and Frontiers.

The conference closed with the presentations of Kirill V. Kovtunov, Eugenio Daviso, and Giuseppe Sicoli, all winners of the Wiley Prize.

Compared with the 49th ENC (the North American counterpart of EUROMAR), I noticed, apart from the emphasis on ESR and on spotting explosives, a rather puzzling absence of the frontier topic known as sparse, or compressed, data sampling.

To conclude, allow me a few very personal notes. I presented two posters at the meeting: one with Carlos Cobas of Mestrelab Research about our grand roadmap towards semi-automatic use of 1H-NMR in the verification and elucidation of molecular structures (click here), and one on the properties of spin radiation (click here). Since true spin radiation has yet to be detected and some of its properties are entirely hypothetical, it is only logical that the latter poster was listed in the Frontiers category. Finally, the talk-and-poster I enjoyed most was the one delivered by Robert J. Prance et al. from the University of Sussex, UK, entitled Development of Electric Field NMR. It is amazing that, using simple needle-like electrometric sensors to detect the electric field associated with the precessing sample magnetization, they can obtain sensitivity comparable to that of conventional induction coils (click here). I am willing to bet that this will lead to major breakthroughs.
